Challenges of New Encoding Scenarios: Reflections on Measuring Perceived Quality

In the ever-evolving landscape of video technology, new encoding scenarios present a set of new challenges in measuring accurately perceived quality. Accurate quality measurement is essential for assessment and even more for optimization, enabling us to fully exploit the potential of those advanced scenarios.

The evolution of modern codecs and the integration of artificial intelligence (AI) have paved the way for significant advancements in video compression and quality enhancement. However, traditional metrics used today for assessing quality, such as VMAF, may be inadequate for those innovative approaches.

The Rise of Film Grain Synthesis and AI in Modern and Future Codecs

Modern codecs like AV1, VVC, and LCEVC are at the forefront of the current technological shift in video compression. One of their most notable features is the native support for film grain synthesis (FGS). Film grain and sensor noise, elements that add a sort of natural and cinematic feeling to video content, are ubiquitous but have historically been challenging to compress. Traditional methods struggle to maintain the quality of film grain without significantly increasing the bitrate. This is particularly challenging with codecs like H.264 and H.265, where Film Grain Synthesis (FGS) support is not standard. In these cases, grain and noise must be treated as high-frequency details and processed using motion estimation, compensation, and block coding—just like any other moving or static part of the image. Handling these elements effectively is a complex task.

The innovative approach behind FGS is to generate film grain algorithmically during playback instead of trying to compress it. The logic is to measure and remove grain during compression, to later re-introduce it algorithmically during playback, transmitting only inexpensive parameters for the reconstruction.

This method drastically reduces the amount of information needed, making it possible to achieve high-quality film grain with minimal data transfer. This leap in compression efficiency “would be” a game-changer for the new codecs and one of the reasons that may push their adoption. I say “would be” because, currently, it is not widely used because of the aforementioned problems in quality assessment.

The reconstruction of the grain is, perceptually speaking, very pleasant and hardly discernible from the original, but pixel for pixel, the high-frequency signal is very different from the original, causing an underestimation of quality in full-reference metrics like PSNR, SSIM, and also, to a minor extent, VMAF.

In fact, VMAF is not fully reliable when grain retention comes into play, and so it’s currently difficult to guide the optimization efforts during codec or encoding pipeline tuning because it requires extensive and slow subjective assessments.

In many years of experience with this metric, some points of weakness emerged, in particular, an insensitivity to banding and to grain retention.

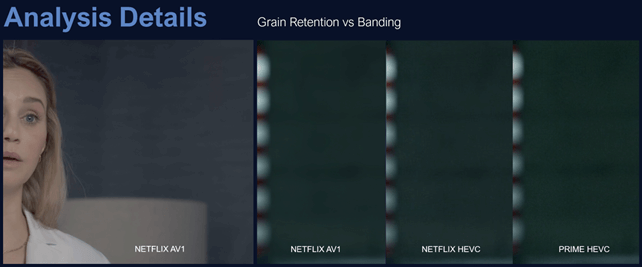

In Figure 1, you can see an example of excessive compression produced by Netflix with AV1. Notice that we are speaking of base AV1 without FGS, which Netflix has not yet used. Both AV1 and HEVC in the picture have a target VMAF of ~94, but the final AV1 result is plagued by banding, with a substantial elimination of high frequencies and grain. Globally, the AV1 encodings appear to have a plastic feeling: sharp edges but zero grain and emerging banding. If VMAF fails at assessing a proper grain retention, how could it assess the case of FGS? We definitely need a more reliable way to assess these scenarios.

Figure 1. Excessive compression produced by Netflix with AV1

AI-Powered Video Quality Enhancement and AI-based Codecs

Another transformative development is the use of AI models to enhance video quality. These AI models work by correcting artifacts, adding intricate details, and providing an apparent increase in resolution or a full, super-resolution upscale. This not only improves the visual experience but may eventually be tuned to enhance encoding efficiency. The ability to increase perceived quality while maintaining or even reducing data requirements is a significant breakthrough in video technology.

However, again, these advancements introduce complexity in quality assessment. Current full reference metrics like VMAF are designed to measure quality relative to the original source video. When AI-enhanced techniques introduce new details, correct artifacts (like banding), or modulate grain, these metrics may not accurately reflect the improved visual quality. In fact, they might even suggest a decrease in quality because of the introduced "distance" from the original source. For example, if AI reduces banding eventually present in the source or enhances a vanishing texture, it introduces something “new” that’s identified by traditional full-reference metrics as an encoding degradation/distortion, even if, perceptually speaking, it's an improvement.

In Figure 2, you can see on the left the original picture (A) and on the right the enhanced one (B). After encoding both at a given bitrate, we will have a higher quality result when starting from B compared to A, but if we measure the quality with a full-reference metric as a measure of the degradation of quality compared to the source, we may end up with quite different conclusions.

Figure 2. The original image is on the left, the AI-enhanced image on the right.

In Figure 3, there are other examples of AI enhancements and deep neural network (DNN) super-resolution that introduce or better “evolve” details and increase the apparent resolution and sense of detail. But again, to assess reliably those improvements, we need more “absolute” metrics capable of estimating the quality on an absolute scale and not as a degree of degradation from the source.

Figure 3. More AI-enhanced and DNN super-resolution images

An even more complex scenario of future hybrid or purely AI codecs poses new challenges in quality assessment and codec fine-tuning because those codecs will have completely new types of artifacts and distortions and may use generative techniques to create realistic textures and features at very low bitrates, features that will sacrifice fidelity to pleasantness and likelihood.

The Need for New Quality Metrics

VMAF is a popular and widely used metric today. It is based on four elementary metrics correlated to a predicted quality score using a Support Vector Regressor (SVR) trained using subjective quality scores collected with Absolute Score Rating (ASR) methodology. This last detail suggests a certain degree of flexibility in predicting quality, but while it has been successful in many applications, it does have certain limitations that can impact its effectiveness in specific scenarios.

Here are summarized some of the key limitations of VMAF:

- It is a full-reference metric, meaning it requires access to the original, uncompressed source video to compare against the encoded video. This may be a limit in some scenarios.

- It has been assessed with subjective data collected in “standard” viewing conditions; it lacks a more sensible/demanding model to intercept subtle or emerging artifacts.

- VMAF, while sophisticated, may not always accurately capture all types of video artifacts. For example, it has struggled with detecting specific issues like banding, blocking, or noise, which might not significantly impact the VMAF score but are perceptually noticeable to viewers.

- It is designed to assess traditional compression artifacts and may not be well-suited for modern encoding techniques that introduce new types of visual changes. For instance, with the advent of AI-enhanced video quality improvements and film grain synthesis, VMAF may not correctly assess these enhancements and might even penalize them as degradations.

- VMAF generally measures fidelity to the source video, which is not always synonymous with perceptual quality. Modern video enhancement techniques, like AI-driven super-resolution, can increase perceptual quality by adding detail or correcting artifacts, yet they may reduce fidelity to the original source. VMAF might not appropriately reflect these improvements, sometimes even indicating a lower quality score despite perceptual enhancements.

To address those issues, there is a growing need for objective metrics that can provide a more "absolute" quality score. Such metrics should evaluate the overall perceptual pleasantness of the video, rather than just fidelity to the source. This would allow for a more accurate assessment of the quality improvements brought by modern codecs, FGS, and AI enhancements.

One promising tool that I’m experimenting with is IMAX NR-XVS (formerly SSIMwave SVS, Now part of the IMAX StreamAware | ON-DEMAND suite). NR-XVS is a no-reference metric that estimates the perceptual quality of a video sequence without needing access to the source video. It utilizes a DNN to correlate video features with subjective scores on a frame-by-frame basis over an absolute 0–100 quality scale.

In the practice, XVS has demonstrated good sensitivity and linearity, making it a reliable tool for assessing video quality in scenarios where traditional metrics fall short. Before using XVS to assess video quality in no-reference scenarios, I studied the response and linearity of the metric measuring clips encoded with x264 and x265 at various resolutions and bitrates or constant rate factors (CRFs). The statistical distribution is illustrated in Figure 4.

Figure 4. SVS statistical distribution

The metric returned quite linear and proportional results to increases in bitrate and CRF, coherently with subjective scores. When applied to the cases of Figure 1, XVS is able to identify the lack of grain and the plastic “feeling” of Netflix AV1 (and HEVC to some extent) compared, for example, to the same content compressed by Amazon Prime (HEVC), showing a difference of more than 3 XVS points, that’s near a JND (Just Noticeable Difference).

In general, the metric is sensitive to the presence of banding, to a poor high-frequency domain, and to proper edge and motion reconstruction. It’s not perfect, but it’s very promising, and the underlying model will be able in future to estimate the quality of many device types.

Exploring Hybrid Quality Assessment Approaches

While XVS and similar no-reference metrics show great potential, there is also a need for hybrid approaches that combine no-reference and full-reference metrics. This could provide a more comprehensive quality assessment, balancing perceptual pleasantness with fidelity to the source. For instance, a weighted score that considers both absolute and relative quality could offer a more nuanced understanding of video quality.

Projects like YouTube's UGC no-reference quality assessment metric have attempted to address these challenges, but they often lack the accuracy and linearity required for the high-quality demands of OTT streaming services. Therefore, the development and adoption of reliable no-reference metrics, or hybrid systems, are crucial for optimizing new codecs, especially when film grain synthesis and AI enhancements are involved.

IMAX proposes the FR XVS metric with a similar hybrid approach. It is a full-reference metric, but in contrast to the “legacy” EPS, which is similar to VMAF in logic, FR XVS considers both source quality (NR XVS) and encoder performance (EPS) and accounts for information loss during encoding. This delivers a combination of source quality, full-reference quality EPS, and psychovisual effects in the model.

In the upcoming months, I plan to assess FR XVS to better understand if it is the solution to at least some of the problems we have discussed here.

Conclusion

The landscape of video encoding is rapidly evolving, driven by advancements in modern codecs and AI technology. These innovations offer tremendous opportunities for improving compression efficiency and visual quality. However, they also present new challenges in measuring perceived quality.

While VMAF has been a valuable tool for assessing video quality, its limitations highlight the need for complementary metrics or the development of new assessment methods. These new metrics should address VMAF's shortcomings, especially in the context of modern video encoding scenarios that incorporate AI and other advanced techniques. For the best outcomes, a combination of VMAF and other metrics, both full-reference and no-reference, might be necessary to achieve a comprehensive and accurate assessment of video quality in various applications.

Related Articles

This article explores the current state of AI in the streaming encoding, delivery, playback, and monetization ecosystems. By understanding the developments and considering key questions when evaluating AI-powered solutions, streaming professionals can make informed decisions about incorporating AI into their video processing pipelines and prepare for the future of AI-driven video technologies.

29 Jul 2024

Jan Ozer put EVC, VVC, and LCEVC through the paces, checking each for not only encoding quality, encoding complexity, and playback efficiency but also power consumption. Each one has its pros and cons; read on to find out how they all performed.

29 Dec 2021

Video encoding began as a one-dimensional data rate adjustment that reflected the simple reality that all videos encode differently is now a complex analysis that incorporates frame rate, resolution, color gamut, and dynamic ranges, as well as delivery network and device-related data, along with video quality metrics.

29 Jun 2021